Measurement Interdisciplinary Research And Perspectives

Indicators of interdisciplinarity are increasingly requested. Yet efforts to develop aggregate indicators have failed due to the diversity and ambivalence of understandings of interdisciplinarity. Instead of universal indicators, we propose a contextualised process of indicating interdisciplinarity.

Interdisciplinary research for addressing societal issues

In this blog I will share some thoughts developed for and during a fantastic workshop (available here) held last October by the US National Academies to help the National Science Foundation (NSF) set up an agenda on how to better measure and assess the implications of interdisciplinarity (or convergence) for research and innovation. The event showed that interdisciplinarity is becoming more prominent in the face of increasing demands for science to address societal challenges. Thus, policy makers across the globe are asking for methods and indicators to monitor and assess interdisciplinary research: where it is located, how it evolves, and how it supports innovation.

Yet the wide diversity of (sometimes divergent) presentations in the workshop supported the view that policy notions of interdisciplinarity are too varied and too complex to be encapsulated in a few universal indicators. Therefore, I argue here that strategies to assess interdisciplinarity should be radically reframed, away from traditional statistics and towards contextualised approaches. Following recent publications, I propose two different but complementary shifts:

From indicators to indicating:

- An assessment of specific interdisciplinary projects or programs for indicating where and how interdisciplinarity develops as a process, given the particular understandings relevant for the specific policy goals.

From interdisciplinarity to knowledge portfolios:

- An exploration of research landscapes for addressing societal challenges using a portfolio analysis approach, i.e. based on mapping the distribution of knowledge and the variety of pathways that may contribute to solving a societal issue – interdisciplinarity.

Both strategies reflect the notion of directionality in research and innovation, which is gaining traction in policy. Namely, in order to value the intellectual and social contributions of research, analyses need to go beyond quantity (scalars: unidimensional indicators) and to take into account the orientations of the research contents (vectors: indicating and distributions).
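To make the scalar/vector distinction a little more concrete, here is a minimal Python sketch. It assumes, purely for illustration, that a research portfolio can be represented as the shares of its output across a few disciplines; the discipline labels, the shares, and the choice of Shannon entropy as the scalar indicator and cosine similarity as the comparison of orientations are hypothetical and are not taken from the studies discussed here.

```python
import math

# Hypothetical discipline labels and output shares, for illustration only (not real data).
DISCIPLINES = ["agronomy", "economics", "public health"]
portfolio_a = [0.5, 0.3, 0.2]  # oriented mostly towards agronomy
portfolio_b = [0.2, 0.3, 0.5]  # oriented mostly towards public health


def shannon_diversity(shares):
    """Scalar indicator: Shannon entropy of the shares.

    It depends only on the share values, not on which discipline holds
    which share, so it cannot tell these two portfolios apart.
    """
    return -sum(p * math.log(p) for p in shares if p > 0)


def cosine_similarity(u, v):
    """Compare the orientation of two share vectors (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    for name, shares in [("A", portfolio_a), ("B", portfolio_b)]:
        profile = dict(zip(DISCIPLINES, shares))
        print(name, profile, "diversity:", round(shannon_diversity(shares), 3))
    print("cosine similarity A-B:", round(cosine_similarity(portfolio_a, portfolio_b), 3))
```

Both hypothetical portfolios receive the same diversity score (about 1.03), yet their cosine similarity is only about 0.76: the scalar suggests they are equally "interdisciplinary", while the share vectors show that they point towards different knowledge combinations.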

The failure of universal indicators of interdisciplinarity

In the last decade, there have been multiple attempts to come up with universal indicators based on bibliometric data. For example, in the US, the 2010 Science and Engineering Indicators (p. 5-35) reported a study commissioned by the NSF from SRI International, which concluded that it was premature 'to identify one or a small set of indicators or measures of interdisciplinary research… in part, because of a lack of understanding of how current attempts to measure conform to the actual process and practice of interdisciplinary research'.

In 2015, the UK research councils commissioned two independent reports to assess and compare the overall degree of interdisciplinarity across countries. The Elsevier report produced the unexpected result that China and Brazil were more interdisciplinary than the UK or the US, which I interpret as an artefact of unconventional (rather than interdisciplinary) citation patterns of 'emerging' countries. A Digital Science report, with a more reflective, multiple-perspective approach, was interestingly titled 'Interdisciplinary Research: Do We Know What We Are Measuring?' and concluded that:

…choice of data, methodology and indicators can produce seriously inconsistent results, despite a common set of disciplines and countries. This raises questions about how interdisciplinarity is identified and assessed. It also reveals a disconnect between the research metadata that analysts typically use and the research activity they assume they have analysed. The report highlights issues around the responsible use of 'metrics' and the importance of analysts clarifying the link between indicators and policy targets.

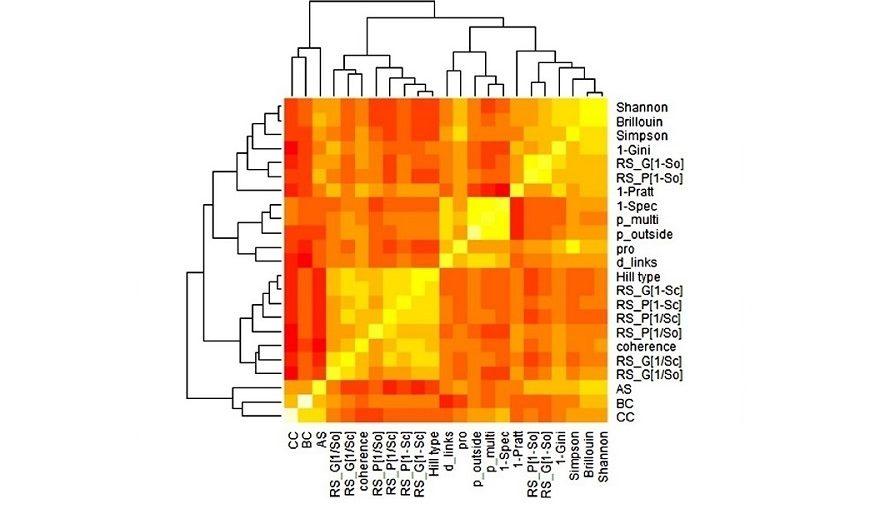

Wang and Schneider, in a quantitative literature review, 'corroborate[d] recent claims that the current measurements of interdisciplinarity in science studies are both confusing and unsatisfying' and thus 'question[ed] the validity of current measures and argue[d] that we do not need more of the same, but rather something different in order to be able to measure the multidimensional and complex construct of interdisciplinarity'.

A broader review on evaluations of interdisciplinarity by Laursen and colleagues also found a striking variety of approaches (and indicators) depending on the contexts, purpose and criteria of the assessment. They highlighted a lack of 'rigorous evaluative reasoning', i.e. insufficient clarity on how criteria behind indicators relate to the intended goals of interdisciplinarity.

These critiques do not mean that one should disregard or mistrust the many studies of interdisciplinarity that use indicators in sensible and useful ways. Rather, the critiques point out that the methods are not stable or robust enough, or that they only illuminate a particular aspect. Such studies are therefore valuable, but only for specific contexts or purposes.

In summary, the failed policy reports and the findings of scholarly reviews suggest that universal indicators of interdisciplinarity cannot be meaningfully developed and that, instead, we should switch to radically different analytical approaches. These results are rather humbling for people like myself who have worked on methods for 'measuring' interdisciplinarity for many years. Nevertheless, they are consistent with critiques of conventional scientometrics and with efforts towards methods for 'opening up' evaluation, as discussed, for example, in 'Indicators in the wild'.

From indicators to indicating of interdisciplinarity

Does it make sense, then, to try to assess the degree of interdisciplinarity? Yes, it may make sense as long as the evaluators or policy makers are specific about the purpose, the contexts and the particular understandings of interdisciplinarity that are meaningful in a given project. This means stepping out of the traditional statistical comfort zone and interacting with relevant stakeholders (scientists and knowledge users) about what types of knowledge combinations make valuable contributions, acknowledging that actors may differ in their understandings.

Making a virtue out of necessity, Marres and De Rijcke highlight that the ambiguity and situated nature of interdisciplinarity allow for 'interesting opportunities to redefine, reconstruct, or reinvent the use of indicators', and propose a participatory, abductive, interactive approach to indicator development. By opening up the processes of measurement in this way, they bring about a shift in framing: from indicators (as closed outputs) to indicating (as an open process).

Marres and De Rijcke's proposal may not come as a surprise to project evaluators, who are used to choosing indicators only after situating the evaluation and choosing relevant frames and criteria; that is, evaluators are in fact used to indicating. But this approach means that aggregated or averaged measures are unlikely to be meaningful.

In a follow-up blog post, I will argue, however, that in order to reflect on knowledge integration to address societal challenges, we should shift from a narrow focus on interdisciplinarity towards broader explorations of research portfolios.

Header image: "Kaleidoscope" by H. Pellikka is licensed under CC BY-SA 3.0.


Source: https://www.leidenmadtrics.nl/articles/on-measuring-interdisciplinarity-from-indicators-to-indicating

Posted by: brechtthenery59.blogspot.com
